Using virtual worlds to train an object detector for personal protection equipment

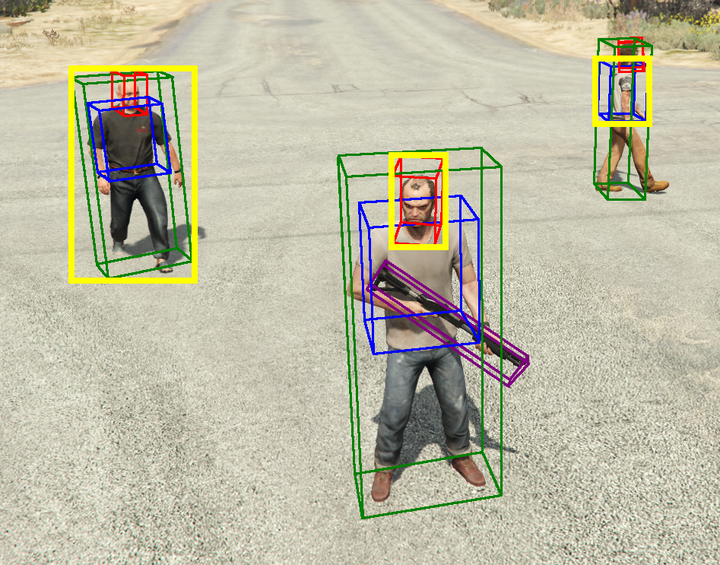

Bounding Boxes generated by V-DAENY

Abstract

Neural Networks are an effective technique in Artificial Intelligence, particularly in Computer Vision. Their main advantage is that they learn from examples, so no prior expertise or domain knowledge needs to be programmed into them.

In recent years, Deep Neural Networks have been applied successfully in many areas, thanks to the huge amount of data made available by the growth of the internet. When annotations are not already available, images must be annotated manually, which can be very costly. Furthermore, in some contexts even gathering suitable images can be impractical because of privacy, copyright, or security concerns.

To overcome these limitations, the research community has begun to build virtual environments that generate automatically annotated training samples. Previous work has shown that using a graphics engine to augment a training dataset is a valid solution.

In this work, we applied the virtual-environment approach to a task not previously considered: the detection of personal protection equipment. The first contribution is V-DAENY, a plugin for the popular video game GTA-V. V-DAENY allows the creation of scenarios in which most aspects can be customized: the number of people, their equipment and behavior, the weather conditions, and the time of day. With V-DAENY, we automatically generated over 140,000 annotated images in several locations of the game map and under different weather conditions.
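The generated annotations are used to train a YOLOv3 detector. As a minimal sketch, assuming the plugin exports each object as a pixel-space box with a class label (the export format is not described in this abstract), the code below converts such a box into the Darknet/YOLO text label format: one line per object, `<class_id> <x_center> <y_center> <width> <height>`, with all coordinates normalized by the image size. The `Box` type, the `to_yolo_line` function, and the `CLASSES` list are hypothetical; the actual classes annotated by V-DAENY are not listed here.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in pixel coordinates (hypothetical export format)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


# Hypothetical class list, for illustration only.
CLASSES = ["person", "helmet", "vest"]


def to_yolo_line(box: Box, img_w: int, img_h: int) -> str:
    """Convert a pixel-space box to a Darknet/YOLO label line with
    center, width and height normalized to [0, 1]."""
    # Clip to the image so partially visible objects stay in range.
    x0 = max(0.0, min(box.x_min, img_w))
    x1 = max(0.0, min(box.x_max, img_w))
    y0 = max(0.0, min(box.y_min, img_h))
    y1 = max(0.0, min(box.y_max, img_h))
    if x1 <= x0 or y1 <= y0:
        raise ValueError("box is outside the image or degenerate")
    cls = CLASSES.index(box.label)
    xc = (x0 + x1) / 2 / img_w
    yc = (y0 + y1) / 2 / img_h
    w = (x1 - x0) / img_w
    h = (y1 - y0) / img_h
    return f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A box covering the central half of a 200x100 image.
    print(to_yolo_line(Box("person", 50, 25, 150, 75), 200, 100))
```

Clipping keeps people who are partly off-screen inside the valid coordinate range; whether V-DAENY itself clips or discards such boxes is not stated in this abstract.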

The second contribution consists of two datasets of real images that can be used for training and testing. One contains images subject to copyright restrictions, while the other contains only copyright-free images. Both datasets contain pictures taken in various contexts, such as airports, building sites, and military sites.

The third contribution is an evaluation of the performance achieved by learning from virtual data. Starting from a pre-trained YOLOv3 detector, we trained a network in two phases: Transfer Learning on virtual data, followed by Domain Adaptation on a small portion of the manually annotated real dataset. We then tested the network on the remaining part of the real dataset. The network trained with this approach achieves promising performance. When trained with virtual data only, it achieves excellent precision on virtual data and good precision on real data. After Domain Adaptation, it achieves high precision on both real and virtual data. For comparison, applying only Domain Adaptation to the base YOLOv3 yields a precision similar to that obtained by training with virtual data only. These results suggest that computer-generated training samples can replace most of the real dataset while still achieving very good results.
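The results above are reported as precision, but the abstract does not specify how detections are matched to ground truth. A common convention for object detectors is to count a prediction as a true positive when its Intersection over Union (IoU) with a not-yet-matched ground-truth box is at least 0.5, processing predictions in order of decreasing confidence. The sketch below computes single-class precision under that assumption; the 0.5 threshold, the greedy matching, and the names `iou` and `precision` are illustrative choices, not necessarily the evaluation protocol used in this work.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in pixels
BoxT = Tuple[float, float, float, float]


def iou(a: BoxT, b: BoxT) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision(preds: List[Tuple[BoxT, float]], gts: List[BoxT],
              thr: float = 0.5) -> float:
    """Precision = TP / (TP + FP) for one class.

    preds holds (box, confidence) pairs; each ground-truth box can be
    matched at most once, so duplicate detections count as false positives.
    """
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    return tp / len(preds) if preds else 0.0
```

For a multi-class detector such as one for protective equipment, this would typically be computed separately per class and then averaged.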